revised December-January 2021

| CS 415, Section 002 | Sonoma State University | Spring, 2022 |

|

Algorithm Analysis

|

||

|

Instructor: Henry M. Walker

Lecturer, Sonoma State University | ||

Initial Notes:

The quicksort was originally devised by C. A. R. Hoare. For more details, see the Computing Journal, Volume 5 (1962), pages 10-15.

The basic approach involves applying an initial step that partitions the array into two pieces. The algorithm then is applied recursively to each of the two pieces. This approach of dividing processing into pieces and applying recursion to the two pieces turns out to be extremely general and powerful. Such an approach is called a divide and conquer algorithm.

Much of the following discussion is based on the presentation in Henry M. Walker, Pascal: Problem Solving and Structured Program Design, Little, Brown, and Company, 1987, pages 500-506, and is used with permission of the copyright holder.

The alternative loop invariant discussed below is based on Thomas H. Cormen, Charles E. Leiserson, Ronald L. Rivset, and Clifford Stein, Introduction to Algorithms, Third Edition The MIT Press, 2009, pages170-185.

The quicksort is a recursive approach to sorting, and we begin by outlining the principal recursive step. In this step, we make a guess at the value that should end up in the middle of the array. In particular, given the array a[0], ..., a[N-1] of data, arranged at random, then we might guess that the first data item a[0] often should end up in about the middle of the array when the array is finally ordered. (a[0] is easy to locate, and it is as good a guess at the median value as another.) This suggests the following steps:

A specific example is shown below:

With the above outline, we now consider how to move the first array element into its appropriate location in the array. There are two basic approaches, based on two different loop invariants:

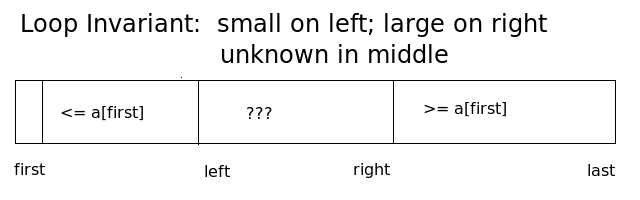

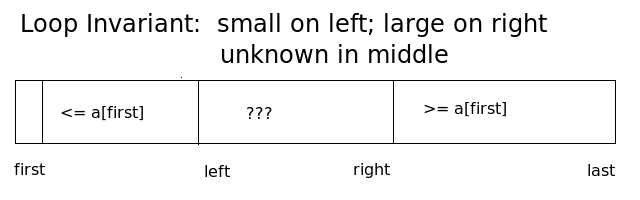

Approach 1:

In this approach, the idea is to maintain small elements at the start of the array, just after a[first] and to maintain large elements at the end of the array. Elements that have yet to be examined are in the middle. As processing proceeds, the middle section gets successively smaller, until all elements have been put into the correct section.

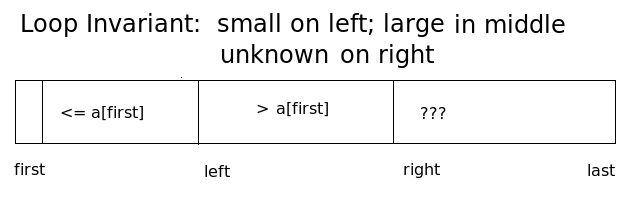

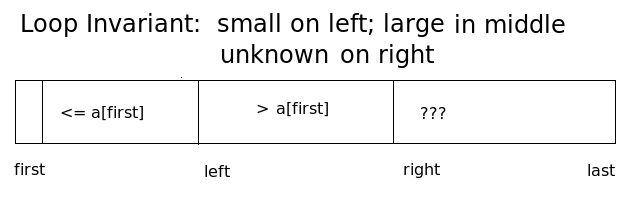

Approach 2:

In this approach, the idea is to maintain small elements and then large elements toward the start of the array. The right of the array contains elements that are yet to be examined. As processing proceeds, the right section shrinks until all items are in their appropriate section.

The basic approach is to work from the ends of the array toward the middle, comparing data elements to the first element and rearranging the array as necessary. This idea is encapsulated in the following diagram:, which is repeated from above.

To implement this approach, we move left and right toward the middle — maintaining the loop invariant with each step.. The details follow:

These steps are illustrated in the following diagram:

The following code implements this basic step:

int left=first+1;

int right=last;

int temp;

while (right >= left) {

// search left to find small array item

while ((right >= left) && (a[first] <= a[right]))

right--;

// search right to find large array item

while ((right >= left) && (a[first] >= a[left]))

left++;

// swap large left item and small right item, if needed

if (right > left) {

temp = a[left];

a[left] = a[right];

a[right] = temp;

}

}

// put a[first] in its place

temp = a[first];

a[first] = a[right];

a[right] = temp;

Given the above code to place the first element of an array segment appropriately and rearrange small and large items, the full array may be sorted by applying the algorithm recursively to the first part and the last part of the array. The base case of the recursion arises if there are no further elements in an array segment to sort.

This gives rise the the following code, called a quicksort.

public static void quicksort (int [] a) {

// method to sort using the quicksort

quicksortKernel (a, 0, a.length-1);

}

private static void quicksortKernel (int [] a, int first, int last) {

int left=first+1;

int right=last;

int temp;

while (right >= left) {

// search left to find small array item

while ((right >= left) && (a[first] <= a[right]))

right--;

// search right to find large array item

while ((right >= left) && (a[first] >= a[left]))

left++;

// swap large left item and small right item, if needed

if (right > left) {

temp = a[left];

a[left] = a[right];

a[right] = temp;

}

}

// put a[first] in its place

temp = a[first];

a[first] = a[right];

a[right] = temp;

// recursively apply algorithm to a[first]..a[right-1]

// and a[right+1]..a[last], provided these segments contain >= 2 items

if (first < right-1)

quicksortKernel (a, first, right-1);

if (right+1 < last)

quicksortKernel (a, right+1, last);

}

This code illustrates the husk-and-kernel programming style which arose frequently earlier in CSC 151.

In this approach, we compare successive items in the array to a[first].

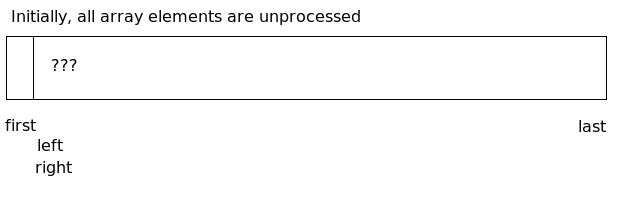

Examining this picture in more detail, at the start, there are no examined items, so there are no items that we know are <= a[first] and also no items that we know are > a[first], as shown in the following diagram:

This picture suggests the following initialization:

left = first + 1; right = first + 1;

Once processing has begun, we examine the unprocessed element a[right].

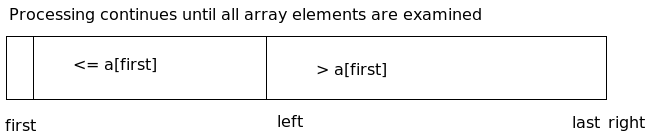

Processing continues until all items are processed, as shown in the following diagram:

Based on this outline, the entire code for placing a[first] in its correct position is:

// progress through array,

// moving small elements to a[first+1] .. a[left-1]

// and moving large elements to a[left] .. a[right-1]

while (right <= last)

{

if (a[first] < a[right])

right++;

else {

// swap a[left] and a[right]

temp = a[right];

a[right] = a[left];

a[left] = temp;

left++;

right++;

}

}

// put a[first] in its place

temp = a[first];

a[first] = a[left-1];

a[left-1] = temp;

This code fits into the overall framework quicksort procedure in much the same way as the code following approach 1 with its loop invariant (although you need to adjust the recursive calls to reflect the new loop invariant).

The quicksort is called a divide-and-conquer algorithm, because the first step normally divides the array into two pieces and the approach is applied recursively to each piece.

Suppose this code is applied an array containing n randomly ordered data. For the most part, we might expect that the quicksort's divide-and-conquer strategy will divide the array into two pieces, each of size n/2, after the first main step. Applying the algorithm to each half, in turn, will divide the array further -- roughly 4 pieces, each of size n/4. Continuing a third time, we would expect to get about 8 pieces, each of size n/8.

Applying this process i times, would would expect to get about 2i pieces, each of size n/2i.

This process continues until each array piece just has 1 element in it, so 1 = n/2i or 2i = n or i = log2 n. Thus, the total number of main steps for this algorithms should be about log2 n. For each main step, we must examine the various array elements to move the relevant first items of each array segment into their correct places, and this requires us to examine roughly n items.

Altogether, for random data, this suggests that the quicksort requires about log2 n main steps with n operations per main step. Combining these results, quicksort on random data has O(n log2 n).

A basic quicksort algorithm typically chooses the first or last element in an array segment as the point of reference for subsequent comparisons. (This array element is often called the pivot.) This choice works well when the array contains random datam and the work for this algorithm has O(n log n) with random data.

However, when the data in the array initially are in ascending order or descending order, the analysis of efficiency breaks down, and the quicksort has O(n2). (The analysis is much the same as insertion sort for data in descending order.)

To resolve this difficulty, it is common to select an element in the array segment at random to be the pivot. The first part of the quicksort algorithm then follows the following outline:

private static void quicksortKernet (int[] a, int first, int last)

{

pick an array element a[first], ..., a[last] at random

swap the selected array element with a[first]

continue with the quicksort algorithm as described earlier

...

}

With this adjustment, quicksort typically performs equally well (O(n log n)) for all types of data.

|

created December, 2021 revised December-January 2021 |

|

| For more information, please contact Henry M. Walker at walker@cs.grinnell.edu. |